Architecture and programming of the Media Streaming Infrastructure Video Subsystem and Audio/Video Synchronization:

After the idea for a hardware media player device was canceled, the team focused on developing the Media Streaming Infrastructure (MSI). This would be a large library of software modules that other groups at Intel could use in the future to develop a variety of media-related applications running on various versions of Intel hardware.

The MSI was planned to support things like: playing audio CDs, playing locally stored libraries of mp3 audio files, playing DVD video discs, displaying photos from a photo CD, and even playing video or audio streamed across the internet (although that was beyond the speed of the internet at that time - this was in the era when there were things like Napster, where you could slowly download an mp3 file for a song to play locally later, but the internet was not even fast enough to stream a song, and nowhere near capable of streaming video). The video could be in any resolution, even HD, although, again, this was a future feature - we even had to contract with specialized companies to produce custom high-definition video content for us to use in demonstrations, because this was long before things like Blu-ray had been proposed. So this was similar to the kind of software you would see now in a Roku or Firestick, but supporting even more variations in hardware, and all done back in 1997.

My particular role in this effort was quite major (especially for someone who was in their first software job). I was given the task of creating the architecture and programming all of the modules for the video subsystem, and also of inventing a way to synchronize video and audio.

This was particularly challenging because the MSI was designed to work on any operating system - to test how 'OS-agnostic' it was, we were developing the initial modules to work on both Windows and Linux. The video components had to run on multiple video-specific chipsets, such as Intel's own internal graphics motherboard chips, Nvidia's latest dedicated Graphics Processor boards, and ATI's Rage HDTV chipset that was specific to displaying movies, and they also had to be able to emulate a video display system completely in software if no video-accelerating hardware was present. We also had to support transparent image overlays on top of video, for things like semi-transparent channel logo markings, as well as other overlays like Closed Captioning. This all made the Video Subsystem design quite complex...but also interesting.

But probably the most interesting part of this work for me was designing the audio/video synchronization system. It took a while for me to come up with a plan for how to do this, and then it was tricky to implement, because the way timing works is quite different from one operating system to another (between Windows and Linux, for example). This required me to learn how to write 'driver software', which is specialized software that works in a way similar to the operating system itself, essentially as an 'extension' of the operating system.

I ended up implementing something like a hardware phase-locked loop circuit, except in software. The result was something I felt was unique enough that I applied for a U.S. Patent on the synchronization mechanism. The way patents work at Intel is that you first submit your idea to Intel's internal Patent Group, which reviews the idea and decides whether or not to submit it to the U.S. Patent system. If they do and the patent is awarded, Intel retains the rights to the design (because you are an employee) but they give the employee a $10,000 award. So I submitted my idea to the Intel Patent Group, and after review they decided not to advance it to the U.S. Patent Office...not because it wasn't a good idea, but because they felt it was too close to the synchronization system that Microsoft had built into their DirectX software system, and they didn't want to risk getting into a patent dispute with Microsoft about it.
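To make the phase-locked loop idea mentioned above a bit more concrete, here is a minimal Python sketch of a software loop that slaves a video clock to an audio clock. This is not the original MSI code, which is not reproduced here. The class name `SoftwarePLL`, the `simulate` helper, the gain values, and the simulated drift figures are all assumptions chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class SoftwarePLL:
    """Toy PI-controlled loop that slaves a video clock to an audio clock."""
    kp: float = 2.0          # proportional gain (assumed value)
    ki: float = 1.0          # integral gain (assumed value)
    rate: float = 1.0        # learned long-term speed of the video clock
    video_time: float = 0.0  # current video presentation time, in seconds

    def step(self, audio_time: float, dt: float) -> float:
        """Advance the video clock by one tick and return the phase error."""
        error = audio_time - self.video_time   # phase detector
        self.rate += self.ki * error * dt      # integral term: tracks clock drift
        speed = self.rate + self.kp * error    # loop filter output
        self.video_time += speed * dt          # "oscillator": advance the clock
        return error


def simulate(ticks: int = 3000, dt: float = 1 / 30,
             audio_rate: float = 1.001, audio_offset: float = 0.1):
    """Run the loop against an audio clock that starts ahead and runs 0.1% fast."""
    pll = SoftwarePLL()
    error = 0.0
    for k in range(ticks):
        audio_time = audio_offset + audio_rate * k * dt  # simulated audio clock
        error = pll.step(audio_time, dt)
    return error, pll.rate


if __name__ == "__main__":
    final_error, final_rate = simulate()
    print(f"final phase error: {final_error:.9f} s, learned rate: {final_rate:.6f}")
```

In this sketch the proportional term pulls the video clock toward the audio clock quickly, while the integral term slowly learns the long-term rate difference between the two clocks, so the phase error should shrink toward zero instead of settling at a constant lag. A real player would read `audio_time` from the audio hardware rather than simulating it.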

Our group did complete the MSI architecture and coding - we had 20 engineers working on it for close to 2 years, and I would estimate the whole MSI software library might have been half a million lines of code or so. It was used in a number of public demonstrations, including a live demonstration presented by Craig Barrett, then the CEO of Intel, at one of the Consumer Electronics Shows in Las Vegas.

We also used the MSI in various presentations at the Intel Developer Forum in Tokyo, Japan, and I was able to go to Tokyo on a few occasions to support these demonstrations. Here is a picture of one of these demonstrations in the Intel booth in Tokyo (I am seated on the stage, running the demo software)...

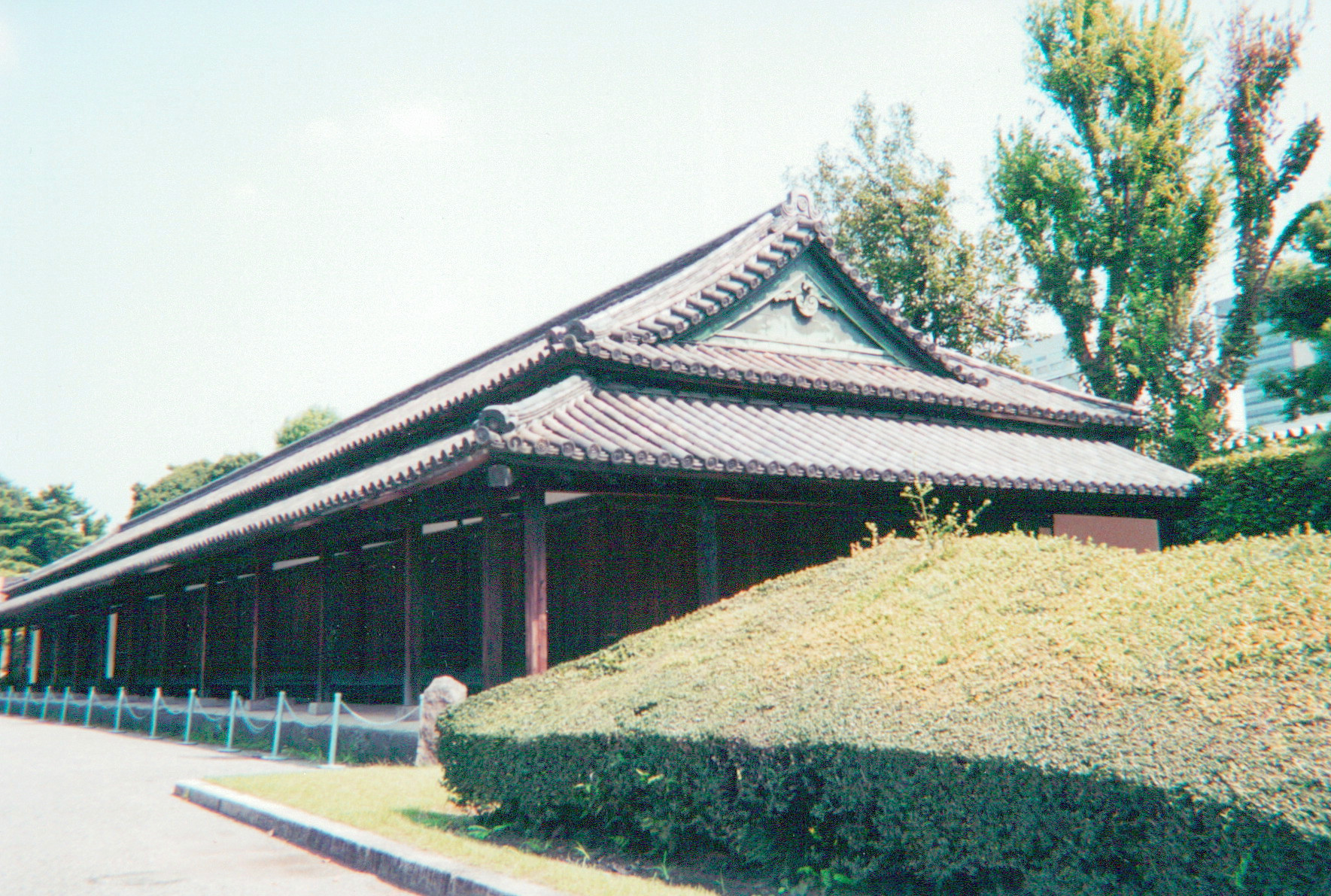

We also got to tour a lot of interesting places around Tokyo. Unfortunately, I don't have many pictures, but I did find this one of a samurai bunkhouse on the grounds of the Emperor's Palace:

This job came to an end when there was a downturn in the U.S. economy and Intel's sales in general were declining, so the company was looking for ways to cut costs. Intel Labs was a large organization, and although it did useful research, it didn't produce products whose sales led directly to profits, so the decision was made to consolidate all of the Intel Labs in Oregon.

The Oregon part of Intel Labs also had a media-oriented group, and they were developing a streaming infrastructure, but one that only worked with audio - it had no video capability, like our Media Streaming Infrastructure did. Even though our product was much more advanced and had been used in demonstrations featuring the CEO, the decision was made to close our lab in Arizona in favor of the lab in Oregon. So I was redeployed again, along with all of the other engineers in my group.